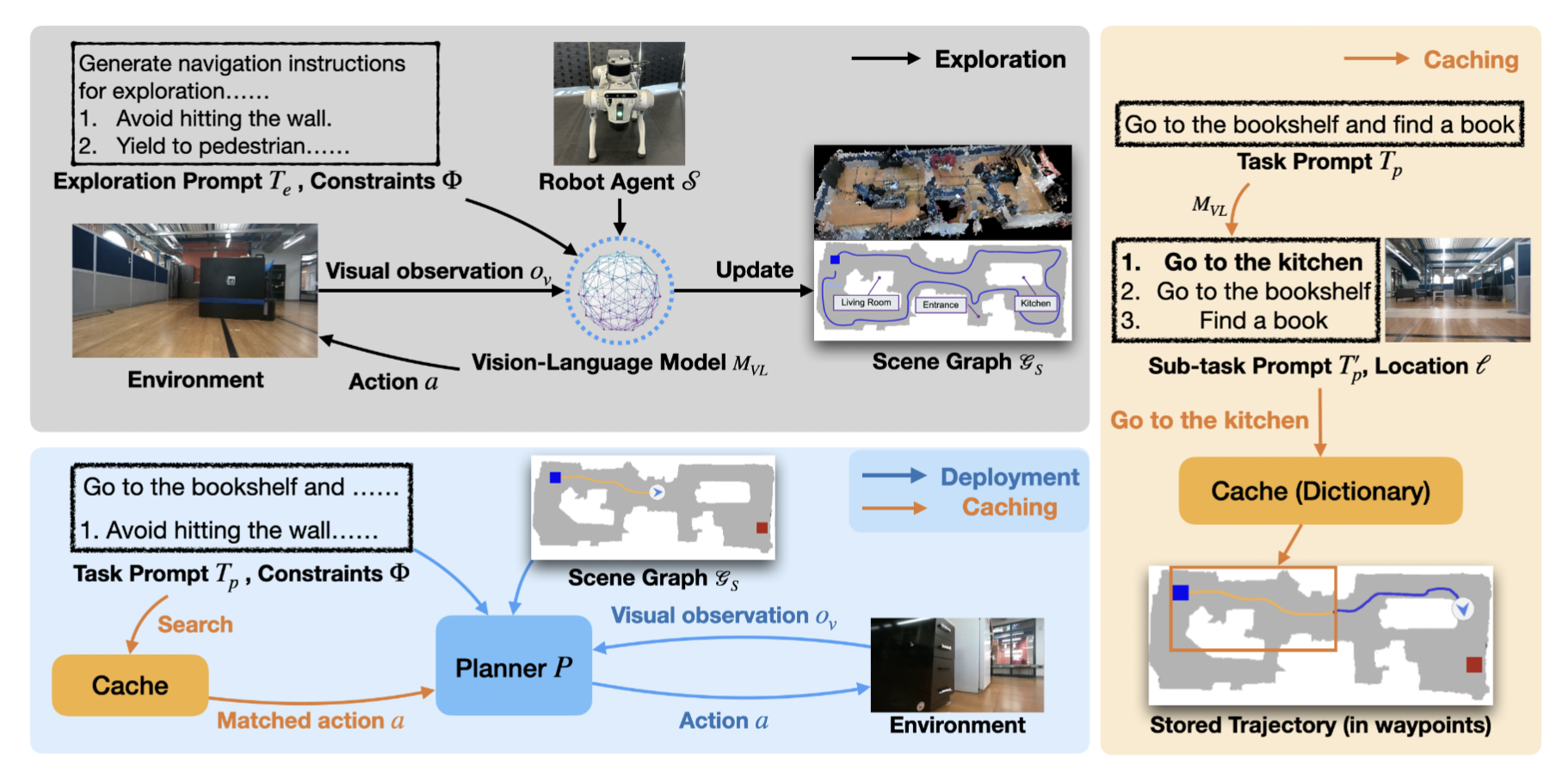

Framework Overview

Overview of VLN-Zero. The framework consists of two main phases: exploration and deployment. In the exploration phase (gray), the VLM guides a robot agent as it interacts with the environment under user-specified constraints, producing an action at each step and ultimately yielding a scene graph (top-down map). In the deployment phase (blue), a planner uses the generated scene graph, visual observations, and constraints to produce constraint-satisfying actions for a task prompt. At execution time, a caching module speeds things up further by reusing previously validated trajectories through compositional task decomposition.
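To make the caching idea concrete, here is a minimal sketch of a trajectory cache keyed by subtask. It is not VLN-Zero's implementation. Several assumptions are hypothetical: `decompose` splits a compound prompt on the word "then", actions are plain strings, and `planner` and `validate` are stand-ins for the scene-graph planner and whatever check marks a trajectory as validated. The sketch shows the core mechanism: a compound task is broken into subtasks, any subtask with a validated trajectory is served from the cache, and only the remaining subtasks are sent to the planner.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List

Action = str  # hypothetical: an action is a string such as "navigate(...)"


def decompose(task: str) -> List[str]:
    """Hypothetical compositional decomposition: split a prompt on ' then '."""
    return [part.strip() for part in task.split(" then ") if part.strip()]


@dataclass
class TrajectoryCache:
    # Hypothetical stand-in for the planner that maps a subtask to actions.
    planner: Callable[[str], List[Action]]
    # Hypothetical validity check; only trajectories that pass are cached.
    validate: Callable[[str, List[Action]], bool] = lambda s, t: len(t) > 0
    store: Dict[str, List[Action]] = field(default_factory=dict)
    hits: int = 0
    misses: int = 0

    def plan(self, task: str) -> List[Action]:
        actions: List[Action] = []
        for subtask in decompose(task):
            cached = self.store.get(subtask)
            if cached is not None:
                # Reuse a previously validated trajectory; no planner call.
                self.hits += 1
                actions.extend(cached)
                continue
            # Cache miss: plan from scratch, and cache only if validated.
            self.misses += 1
            trajectory = self.planner(subtask)
            if self.validate(subtask, trajectory):
                self.store[subtask] = trajectory
            actions.extend(trajectory)
        return actions


if __name__ == "__main__":
    cache = TrajectoryCache(planner=lambda s: [f"navigate({s})"])
    print(cache.plan("go to kitchen then go to sofa"))
    # ['navigate(go to kitchen)', 'navigate(go to sofa)']
    print(cache.plan("go to sofa then go to door"))
    # ['navigate(go to sofa)', 'navigate(go to door)']
    print(cache.hits, cache.misses)  # 1 3
```

On the second prompt, "go to sofa" is served from the cache and only "go to door" reaches the planner. That saving is the kind a cache can offer when new task prompts share subtasks with earlier ones.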